Copyright © Michael Richmond.

This work is licensed under a Creative Commons License.


We live in a world full of electronic gadgets. Smartphones, big-screen television sets, GPS receivers. Every year, they seem to get bigger and better.

Most astronomers today, both professional and amateur, use electronic detectors to take astronomical images. Is there any doubt that our current cameras are bigger and better than any used by scientists decades ago?

Let me try to convince you that, if we choose one reasonable manner of measuring the quality of a detector, it is only now -- or, perhaps, in the past five years -- that modern electronic detectors have finally caught up to the good old photographic plate.


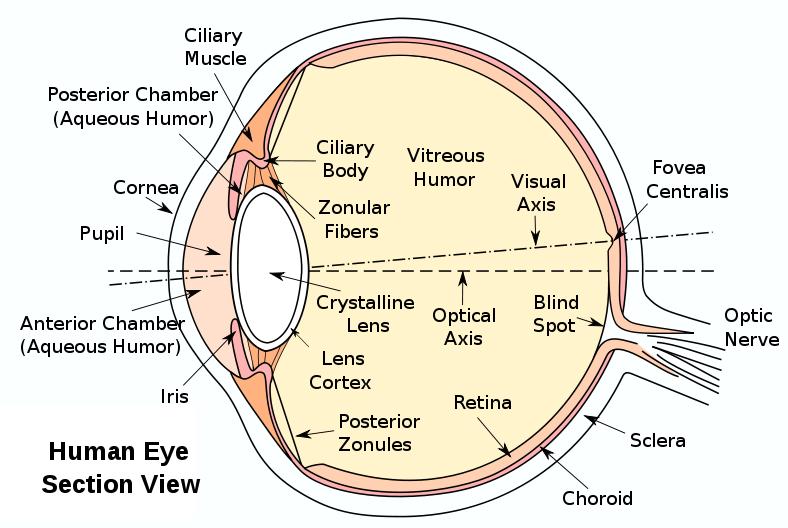

For many tens of thousands of years, the only detector used by humans was the good old Mark I eyeball.

Image courtesy of Jezebel.com

The eye is more than just a detector -- it includes all the optics you need, too!

Image courtesy of Online-Utility.org

The eye focuses light rays onto light-sensitive cells in the retina, along the back surface of the eyeball. When enough photons (roughly 6 photons within a span of 0.1 second) strike a set of these cells, a signal is sent through the optic nerve to the brain.

How well can the eye make quantitative measurements of stellar brightness? With training and experience, and given good conditions, humans can measure the magnitude of a star to a precision of a few percent.

| detector | quantum efficiency | integrate? | permanent? | linear? | precision | ease of use | size |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Eye | 6 % | no | no | no | 2 % | simple | 36 sq. mm |

In the middle of the nineteenth century, chemists and artists figured out that it was possible to focus light onto a specially prepared film of material which could record the image permanently. The early photographic techniques had very low sensitivity to light -- requiring long exposure times to build up a good picture (that's why the people in early photographs are posed so stiffly). The first photograph of the Moon, a daguerreotype taken in 1840, required John William Draper to expose the plate for 20 minutes!

Image courtesy of Lights in the Dark and Prof. Bayrd Still, NYU Archives

As the decades passed, chemists developed materials which were much more sensitive to light, enabling photographers to take images with exposure times of one second or less. His son, Henry Draper, carried on this work and honed his own astrophotographic skills; in 1863, he took a photograph which was considered the best picture of the Moon for decades:

Image courtesy of Hastings Historical Society

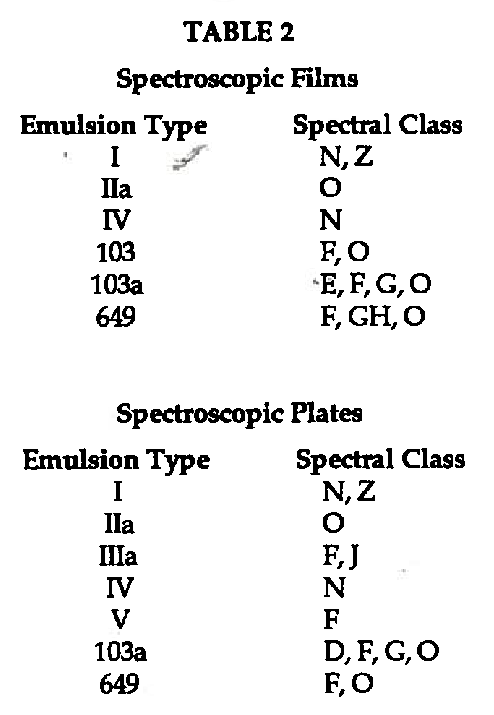

Special emulsions, especially sensitive to low light levels, were developed for astrophotography. Do you recognize any of these names?

Taken from "Kodak Scientific Imaging Products", Kodak Publication L-10, 1987.

Photography offered two big advantages over the human eye:

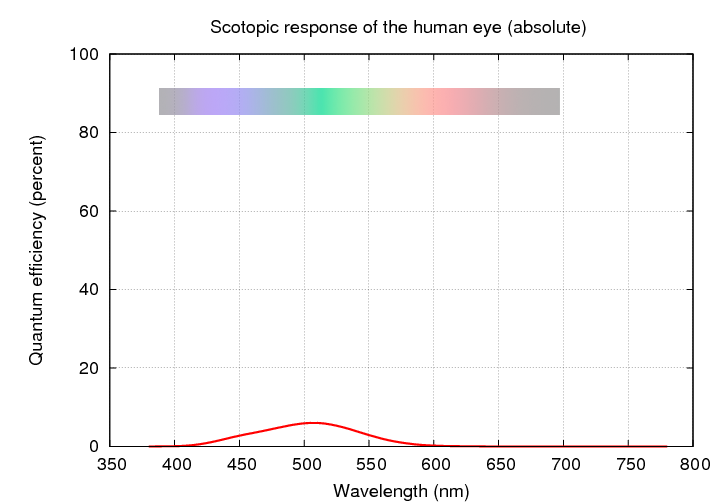

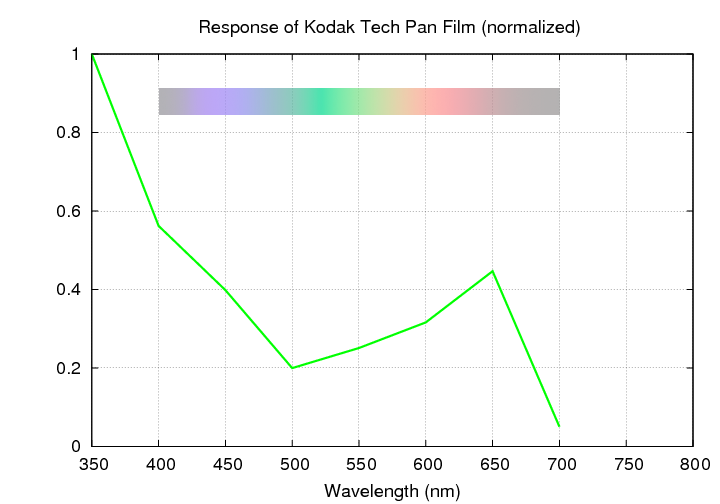

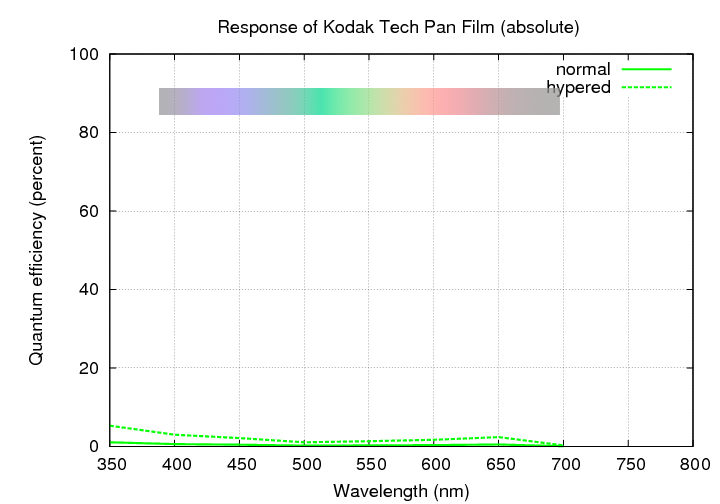

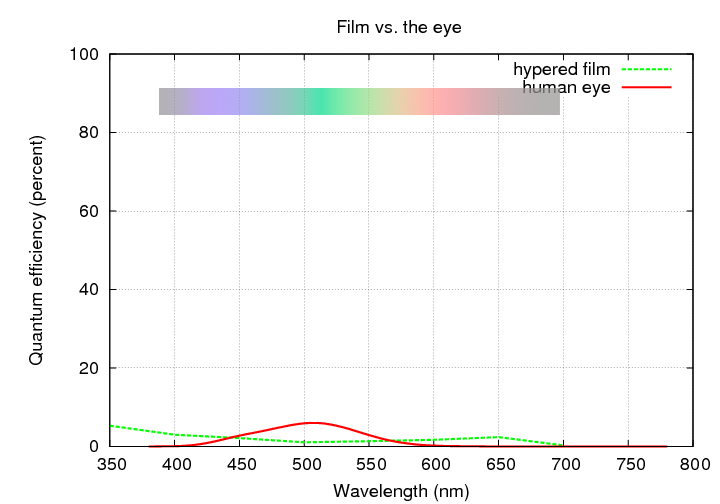

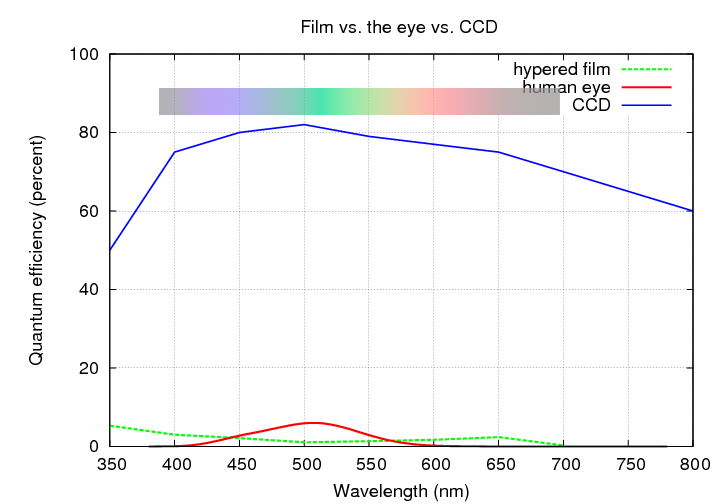

However, even the best photographic emulsions record only a tiny fraction of all the light that strikes them. Pay attention to the second of these graphs.

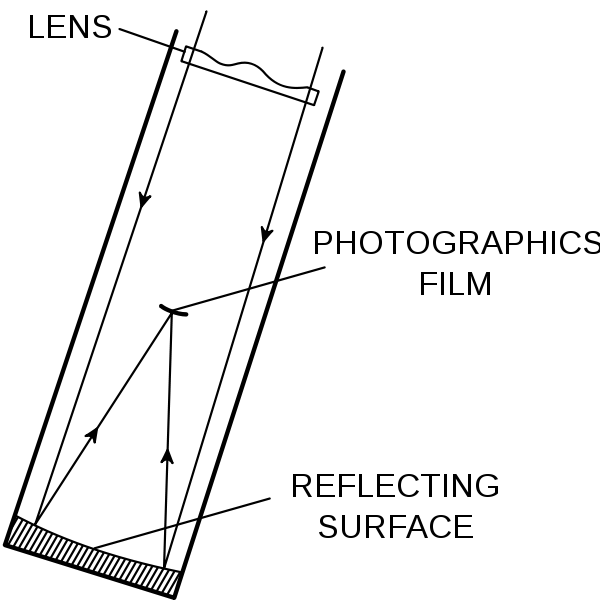

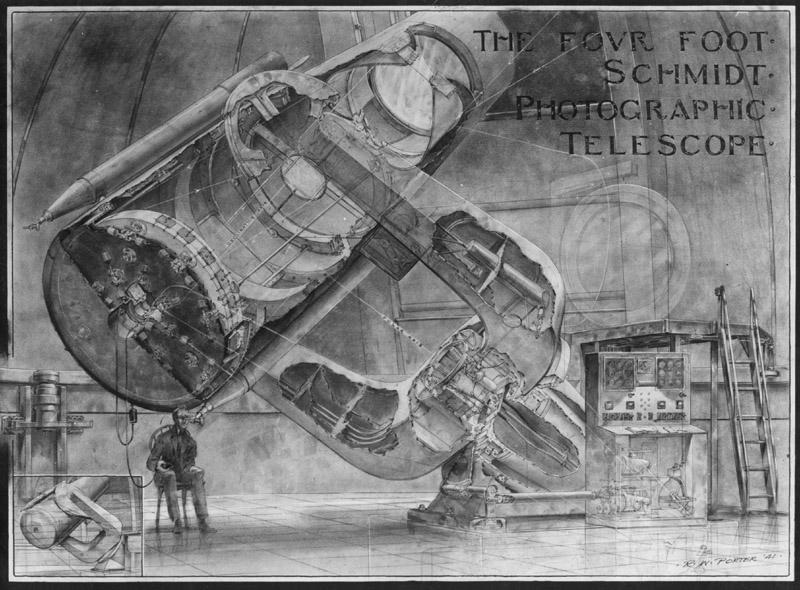

As you can see from the films which we are passing out to the audience now, another big advantage film offered to astronomers was its size: one could (with very careful fabrication techniques) spread photographic emulsion over very large areas, over a backing of glass (plates) or plastic (film). Opticians like Bernhard Schmidt designed special telescopes which could project sharp images covering wide areas of the sky onto large photographic plates.

Image courtesy of Wikipedia and Krzysztof Zajączkowski

You may recognize this particular Schmidt Telescope: it was used to create the Palomar Observatory Sky Survey between 1949 and 1958 and the Second Palomar Observatory Sky Survey between 1986 and 1999. Similar telescopes in Chile (ESO 1-m Schmidt Telescope) and Australia (UK 1.2-m Schmidt Telescope) were used in the 1970s to create a photographic survey of the southern skies.

This drawing by Russell Porter is copyright California Institute of Technology's Palomar Observatory.

Starting around 1990, astronomers greatly increased the utility of the big photographic sky surveys by scanning the photographs and digitizing the results. Using specially built software, they detected and measured the position and brightness of hundreds of millions of stars and galaxies. After a great deal of careful calibration, these objects were placed into catalogs and made available to people around the world.

Astronomers have many tools, such as Aladin, for accessing these catalogs interactively.

How well can these digitized photographs measure the brightness of stars? The big surveys yield stellar magnitudes with a precision of around 6-8 percent at best, and perhaps 10-15 percent more typically.

| detector | quantum efficiency | integrate? | permanent? | linear? | precision | ease of use | size |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Eye | 6 % | no | no | no | 2 % | simple | 36 sq. mm |
| Photograph | 1 - 5 % | yes | yes | no | 8 % | needs developing | 122,000 sq. mm |

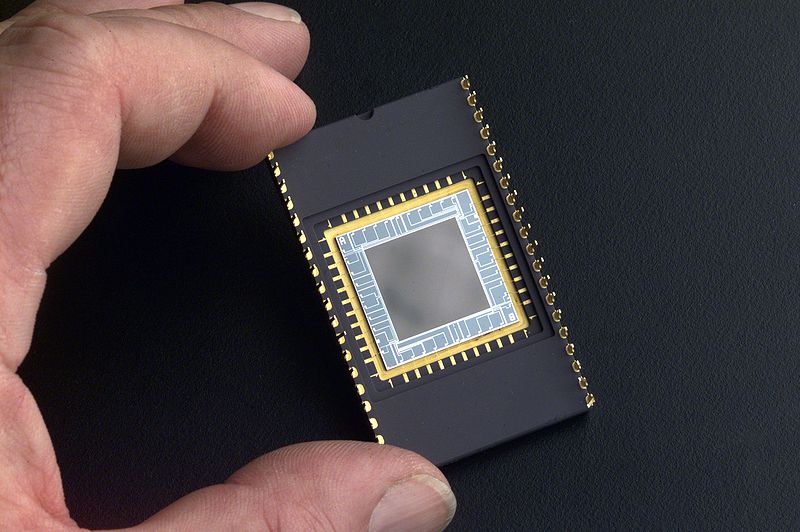

In 1970, scientists at Bell Labs were thinking about ways to store information in solid-state memory. They came up with the idea of dividing a silicon chip into an array of small regions and moving electric charges into and out of the array. By varying the voltages applied to small sections of the chip, the user could couple the charge to specific regions. These chips became known as charge-coupled devices, or CCDs.

It turns out that silicon has many interesting properties: one is its ability to convert individual photons of light into individual electrons. People soon realized that with a little preparation, CCDs could be used to convert light into an image, and then to transfer that image digitally to a computer. Because the devices were novel and expensive, the first applications were in space: astronomers suggested using CCDs for the cameras aboard the Galileo mission to Jupiter, and on the orbiting telescope we now call the Hubble Space Telescope. As years passed, many companies started to fabricate CCDs for industrial applications and the prices dropped. Even ground-based astronomers -- at first professional, but then amateur -- were able to afford these new imaging devices.

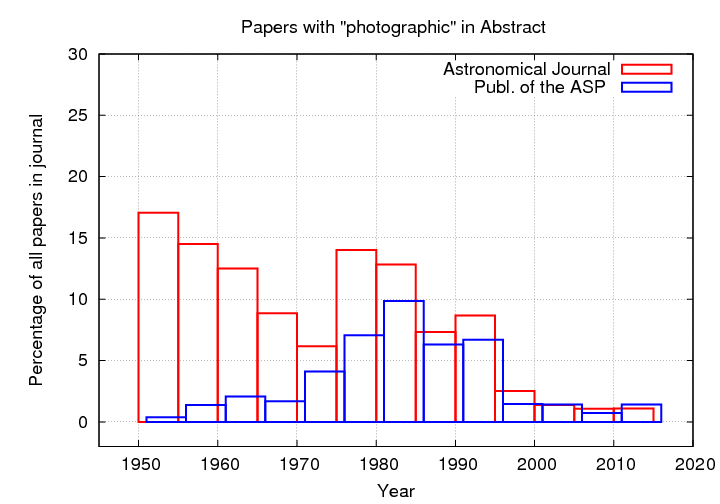

Q: When did CCDs really take over in the community of professional astronomers?

A. around 1970

B. around 1980

C. around 1990

Watch as the fraction of papers with the word "photographic" in the abstract slowly decreases, while the fraction with "CCD" in the abstract increases.

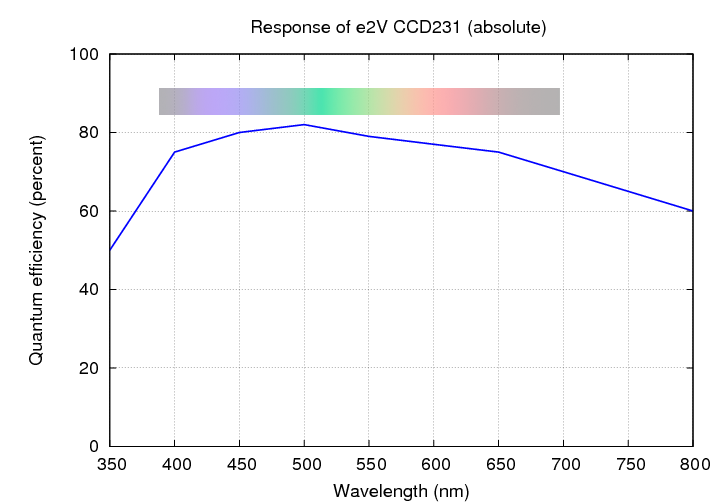

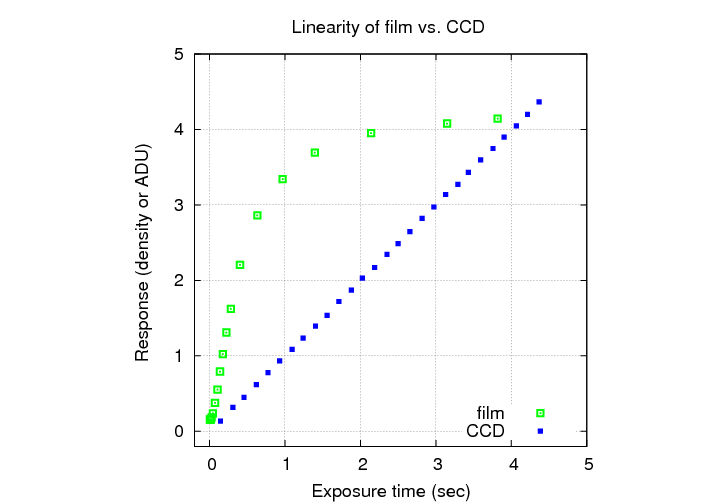

These silicon-based detectors had two big advantages over photographic film: they are more sensitive, and they are linear.

The "sensitivity" part is easy to understand, but what does the "linear" part mean? It means that if one exposes the detector for twice as many seconds, one OUGHT to record a signal twice as large.
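Here is a minimal Python sketch of what "linear" means for an idealized CCD pixel. The photon rate, the 70 percent recorded fraction, and the full-well (saturation) limit are illustrative assumptions, not the specifications of any real chip; the point is only that the signal grows in proportion to exposure time until the pixel fills up.

```python
def ccd_electrons(exposure_s, photon_rate=1000.0, fraction_recorded=0.70,
                  full_well=100_000.0):
    """Electrons collected by one idealized CCD pixel.

    All parameter values are illustrative assumptions:
      photon_rate       -- photons per second striking the pixel
      fraction_recorded -- fraction of those photons turned into electrons
      full_well         -- the most electrons the pixel can hold
    """
    electrons = fraction_recorded * photon_rate * exposure_s
    # Linear response until the pixel is full; after that it saturates.
    return min(electrons, full_well)


short_exp = ccd_electrons(10.0)
long_exp = ccd_electrons(20.0)
print(short_exp, long_exp, long_exp / short_exp)  # twice the time, twice the signal

# Far past saturation, doubling the exposure no longer doubles the signal.
print(ccd_electrons(200.0), ccd_electrons(400.0))
```

Doubling the exposure from 10 to 20 seconds doubles the signal; doubling it from 200 to 400 seconds does not, because in this model the pixel is already full.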

How well can CCDs measure the brightness of stars? The answer really depends on the amount of work one is willing to put into the observing procedure and the analysis. It's easy to reach a precision of one percent for differential measurements, and we have reached 0.3 percent at the RIT Observatory with our little telescopes. The best ground-based measurements can go down to around 0.05 percent.
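One piece of the reason that precision depends on effort is photon statistics. The sketch below assumes, as a best case, that the only noise is the random (Poisson) arrival of photons, so N detected photons give a fractional precision of 1/sqrt(N). Real measurements also suffer from sky background, read noise, flat-fielding errors, and atmospheric scintillation, so treat these numbers as lower limits. The recorded fractions (3 percent for a plate, 70 percent for a CCD) are the ones used elsewhere in this lecture; the function names are only for illustration.

```python
import math

def photons_needed(precision):
    """Detected photons N for which Poisson noise alone gives the requested
    fractional precision: sqrt(N) / N = precision, so N = 1 / precision**2."""
    return 1.0 / precision ** 2

def precision_from_photons(n_photons):
    """Best-case fractional precision from N detected photons."""
    return 1.0 / math.sqrt(n_photons)

def incident_photons(precision, fraction_recorded):
    """Photons that must arrive if the detector records only a fraction of them."""
    return photons_needed(precision) / fraction_recorded

for pct in (1.0, 0.3, 0.05):
    n = photons_needed(pct / 100.0)
    print(f"{pct:4.2f} percent -> {n:,.0f} detected photons")

# Same 1 percent goal for a detector recording 3 percent vs 70 percent of the light.
plate = incident_photons(0.01, 0.03)
ccd = incident_photons(0.01, 0.70)
print(f"plate needs {plate / ccd:.1f} times as much light as the CCD")
```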

You may have noticed that size is listed in the tables above. It might seem to be a simple matter -- a bigger detector will be able to measure light over a wider field, so bigger must mean better. Right?

Well, almost. What we really want is the ability to record as much fine detail as possible. If two detectors can distinguish details at the same physical scale, then, sure, the bigger one will capture more information. But what if they differ in the way they respond to light?

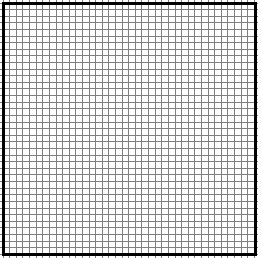

Consider the two detectors shown below. Detector A is twice as large as detector B.

Q: Which will record more information?

Left: Detector A. Right: Detector B.

Let's find out. Below are two pictures of the same region of the Moon, taken with the same optical setup, but two different detectors.

Left: Detector A. Right: Detector B.


What really matters is a combination of the size of the detector AND the size of one of its resolution elements. A resolution element (sometimes called a "pixel") is simply the smallest region of the detector which can respond as a single unit to incoming light. We need to compute this combination:

$$
\text{number of pixels (per side)} = \frac{\text{physical size of detector (mm)}}{\text{size of resolution element (mm)}}
$$

Let's look at the CCD: a new camera for the WIYN 0.9-m telescope has a chip made by e2V, model 231-84. It is relatively large by current astronomical standards:

$$
\frac{\text{physical size of detector (mm)}}{\text{size of resolution element (mm)}} = \frac{61.44\ \text{mm}}{0.015\ \text{mm}} = 4096
$$

The chip is square, so the number of resolution elements is 4096 x 4096 = 16 million, if we round off a bit.

Now, photographic emulsion (like CCDs) comes in many varieties, each of which has somewhat different properties. The emulsions used in astronomical applications such as the Palomar Observatory Sky Surveys had relatively coarse grains. The smallest dark spot which forms in response to light consists of a small number of grains and is roughly 5 to 10 microns in diameter.

If we adopt the larger size for our calculation, then a plate 14 inches (355.6 mm) on a side has

$$
\frac{\text{physical size of detector (mm)}}{\text{size of resolution element (mm)}} = \frac{355.6\ \text{mm}}{0.010\ \text{mm}} = 35{,}560
$$

resolution elements along each side.

The number of resolution elements is 35,560 x 35,560 = 1.3 billion, if we round off a bit. That is roughly 80 times more information than the big CCD!
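The same arithmetic fits in a small Python function. This is only a sketch that assumes square detectors; the inputs are the numbers used above for the e2V 231-84 chip and for a 14-inch plate with 10-micron resolution elements. Without rounding, the plate comes out about 75 times ahead of the CCD.

```python
def resolution_elements(detector_mm, element_mm):
    """Elements per side and in total for a square detector.

    per side = physical size of detector / size of one resolution element
    """
    per_side = round(detector_mm / element_mm)
    return per_side, per_side ** 2


# Numbers from the text: e2V 231-84 CCD, and a 14-inch plate with 10-micron spots.
ccd_side, ccd_total = resolution_elements(61.44, 0.015)
plate_side, plate_total = resolution_elements(14 * 25.4, 0.010)

print(ccd_side, ccd_total)        # 4096 16777216
print(plate_side, plate_total)    # 35560 1264513600
print(round(plate_total / ccd_total, 1))  # 75.4
```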

What about the human eye? How does it compare using this criterion? Well, there's good news and bad news.

All the following can only be approximate values, since individual human eyes vary widely.

$$
\frac{\text{physical size of detector (mm)}}{\text{size of resolution element (mm)}} = \frac{12\ \text{mm}}{0.002\ \text{mm}} = 6000
$$

Using this value, we would estimate the eye to contain roughly 6,000 x 6,000 = 36 million resolution elements, similar to a very large modern CCD.

However, several rod cells send their signals to a single neuron, especially in the outer regions of the retina. So, even though each rod cell records only the light which strikes it, the brain receives a single signal which represents the mix of responses from a number of cells. This reduces the number of resolution elements considerably.

To get some idea for the change in visual acuity as a function of position on the retina, consider this pretty false-color picture of the Moon (red means "high altitude", blue means "low altitude").


The total number of axons in the optic nerve is roughly 1 million, which can serve as an estimate of the number of resolution elements in the eye. That's quite a bit smaller than one would expect from the analysis above, and it definitely moves the eye into third place in the "number of resolution elements" contest.

We've seen that there are a number of factors by which one can compare astronomical detectors. Let's see if we can find a way to combine several of these factors and devise a single metric.

You have exactly one hour to capture as much information about the Orion Nebula region as you can.

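The information we collect depends on the number of resolution elements in the detector and on the fraction of the incoming light it records:

| detector | size | resolution elements |
| --- | --- | --- |
| photograph | 356 mm x 356 mm | 1,300 million |
| CCD | 61 mm x 61 mm | 16 million |
| eye | 12 mm x 12 mm | 1 million |

| detector | fraction of light recorded |
| --- | --- |
| photograph | 3 percent |
| CCD | 70 percent |
| eye | 6 percent |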

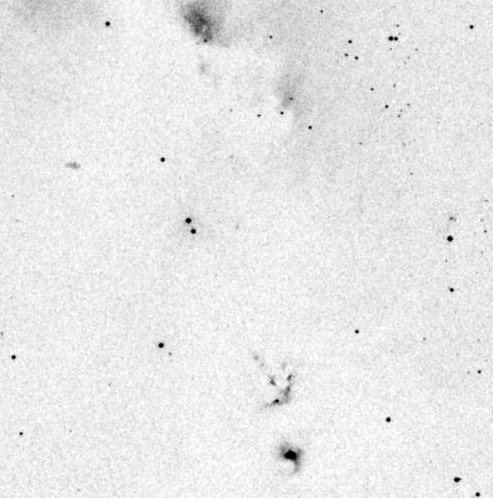

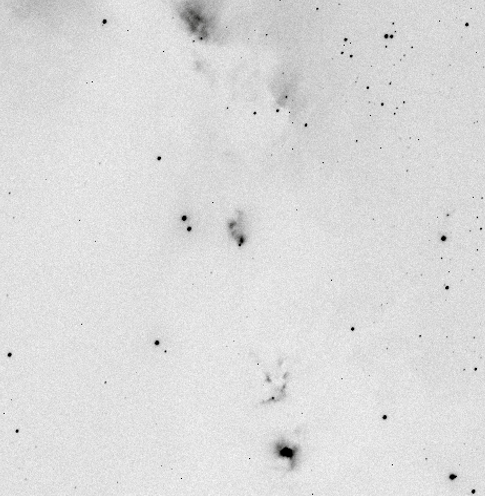

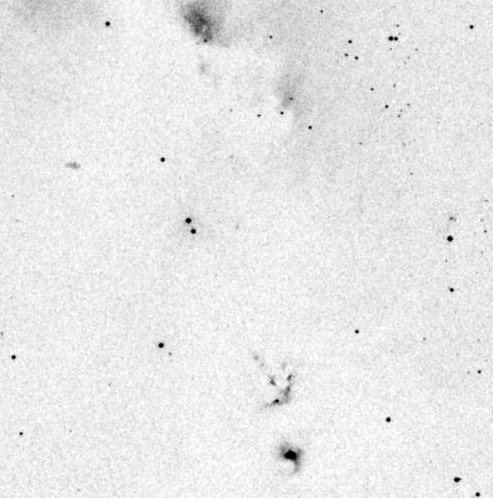

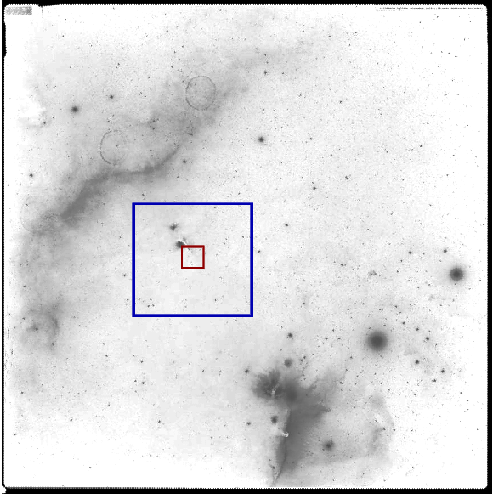

If we are interested in a small region of the sky -- say, the area around McNeil's Nebula -- then the CCD is a better choice. Compare these views taken with a LONG exposure on film (on the left) and a SHORT exposure on a CCD (on the right). In order to reach the same depth, we must expose the film for roughly 17 times longer.

Left: 48-inch Palomar Schmidt, 50 minutes on 103aE; Right: 36-inch WIYN, 5 minutes on the S2KB CCD in R

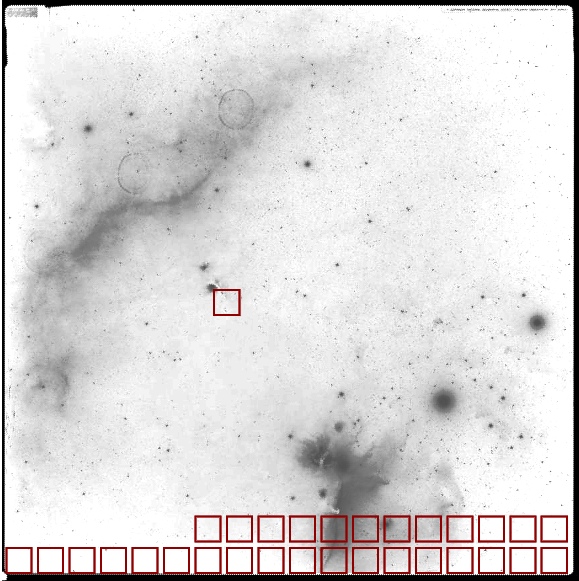

On the other hand, if we are interested in a wide area on the sky, film is a better choice. The picture above shows nearly the entire CCD image, but it is only a small portion of the photographic plate.

A REALLY small portion of the entire photographic plate ...

In order to cover the same area on the sky with a CCD, we would need to take many more exposures, shifting the telescope a bit each time:

So, in the battle between a photographic plate and a single CCD chip, we must compare the longer exposure time for the plate against the multiple exposures required for the CCD. If we use the numbers from our table above, we can compute an overall efficiency for each detector: it's the size (large is better) multiplied by the sensitivity (again, large is better).

| | photographic plate | single CCD |
| --- | --- | --- |
| size | 122,000 sq. mm | 1,800 sq. mm |
| sensitivity | x 3 percent | x 70 percent |
| "efficiency" | 3,660 sq. mm | 1,260 sq. mm |

Hmmm. By this measure, the photographic plate is the winner. Note that we haven't included the readout time for the CCD, which increases the time required for it to take multiple exposures.
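The comparison can be pushed one step further by asking how long a single CCD would need to cover the plate's area to the same depth. This sketch makes several simplifying assumptions: both detectors sit on the same telescope, depth depends only on the number of photons recorded (exposure time times fraction recorded), neighboring CCD fields do not overlap, and the 60-minute plate exposure and 1-minute CCD readout are hypothetical values chosen for illustration.

```python
import math

# Areas and recorded fractions from the comparison table in the text.
PLATE_AREA_MM2, PLATE_FRACTION = 122_000, 0.03
CCD_AREA_MM2, CCD_FRACTION = 1_800, 0.70

def efficiency(area_mm2, fraction_recorded):
    """Figure of merit used in the text: area times fraction of light recorded."""
    return area_mm2 * fraction_recorded

def ccd_minutes_to_match_plate(plate_minutes, readout_minutes):
    """Pointings and total minutes for one CCD to tile the plate's area.

    Assumes the same telescope, depth set only by photons recorded,
    and no overlap between neighboring pointings.
    """
    pointings = math.ceil(PLATE_AREA_MM2 / CCD_AREA_MM2)
    # The CCD records more of the light, so each field needs a shorter exposure.
    minutes_per_field = plate_minutes * PLATE_FRACTION / CCD_FRACTION
    return pointings, pointings * (minutes_per_field + readout_minutes)

plate_eff = efficiency(PLATE_AREA_MM2, PLATE_FRACTION)
ccd_eff = efficiency(CCD_AREA_MM2, CCD_FRACTION)
print(f"plate {plate_eff:,.0f} sq. mm, CCD {ccd_eff:,.0f} sq. mm")

# Hypothetical example: a 60-minute plate versus a CCD with a 1-minute readout.
pointings, minutes = ccd_minutes_to_match_plate(60.0, 1.0)
print(pointings, round(minutes))
```

With these assumptions the CCD needs 68 pointings and roughly four hours of telescope time, versus one hour for the plate. Even with zero readout time it would take almost three times as long, which is essentially the ratio of the two "efficiency" numbers.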

Surprisingly enough, when it comes to recording large areas of the sky, the venerable photographic plate can be more efficient than one CCD.

Yes, yes, I've ignored the factors of linearity and dynamic range, which favor the CCD.

But -- what if we put several CCD chips into a single camera to create a mosaic?

One of the first mosaic cameras was created for the Sloan Digital Sky Survey (SDSS for short). The design was a bit unusual: the CCDs were arranged into 6 columns, each of which had a series of 5 chips with different filters. Instead of tracking the stars exactly, the SDSS telescope would move at a slightly non-sidereal rate in a carefully calculated direction; as a result, stars would drift slowly across the camera, along these columns. In less than ten minutes, the camera would collect an image of each object in the five filters, allowing scientists to measure the color of celestial objects.

| name | # of CCDs | # of pixels | area | first use |
| --- | --- | --- | --- | --- |
| SDSS | 30 | 126 million | 72,600 sq. mm | 1998 |

The Canada-France-Hawaii Telescope (CFHT), built in 1979, has a mirror 3.6 meters in diameter. It sits atop Mauna Kea and is designed to perform well in the infrared as well as the optical. In 2003, astronomers installed a very large camera called MegaCAM in order to carry out large-scale surveys of the sky. You can examine the data collected by this instrument by visiting the CFHT Legacy Survey archive site. You can also browse the CFHT Deep Field #1 with your browser -- there are just too many pixels to show on the screen at once!

| name | # of CCDs | # of pixels | area | first use |
| --- | --- | --- | --- | --- |
| SDSS | 30 | 126 million | 72,600 sq. mm | 1998 |
| MegaCAM | 36 | 340 million | 62,000 sq. mm | 2003 |

OmegaCAM is a 36-chip mosaic designed for use on the wide-field VLT Survey Telescope, a 2.6-m telescope in the mountains of Chile. Four of its chips are used for guiding and image analysis; each of the other 32 science chips has 2K-by-4K pixels, so the science array contains 16K-by-16K = 268 megapixels. The total area of the camera is 260 x 260 mm, which translates into 1 x 1 degree on the sky.

| name | # of CCDs | # of pixels | area | first use |
| --- | --- | --- | --- | --- |
| SDSS | 30 | 126 million | 72,600 sq. mm | 1998 |
| MegaCAM | 36 | 340 million | 62,000 sq. mm | 2003 |
| OmegaCAM | 32 | 268 million | 60,000 sq. mm | 2011 |

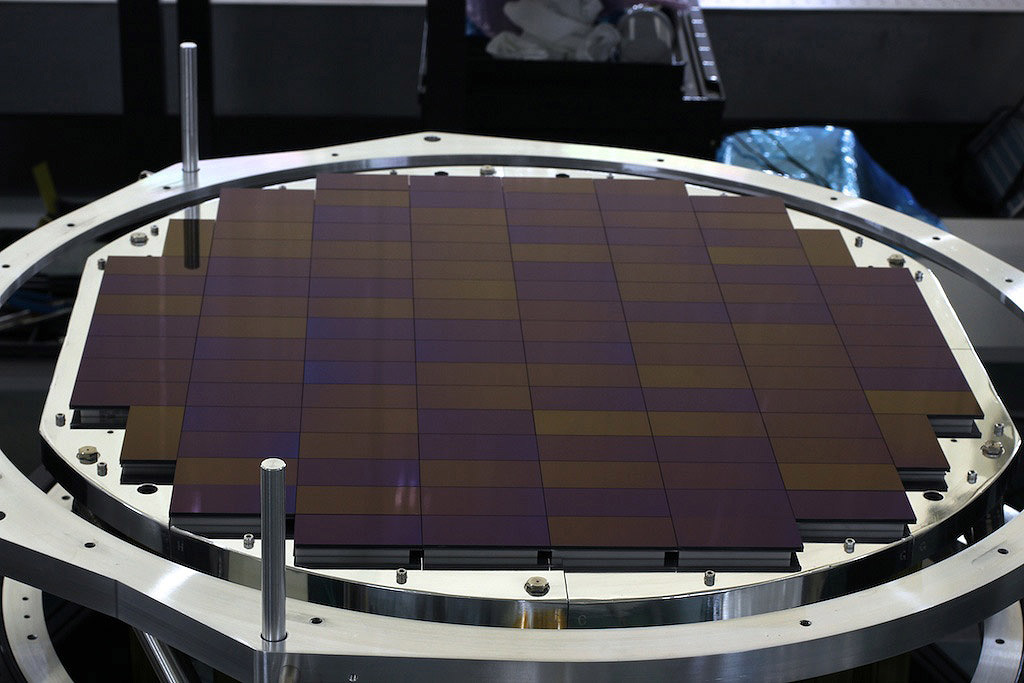

The next stop on our tour of mosaic CCD cameras is Hyper-SuprimeCam, which takes over from the regular old SuprimeCam at the prime focus of the Subaru 8.3-meter Telescope on Mauna Kea. By packing over 100 specially designed CCD chips together into an area over 2 feet wide, scientists are able to cover a field of view roughly 1.5 degrees wide in a single shot.

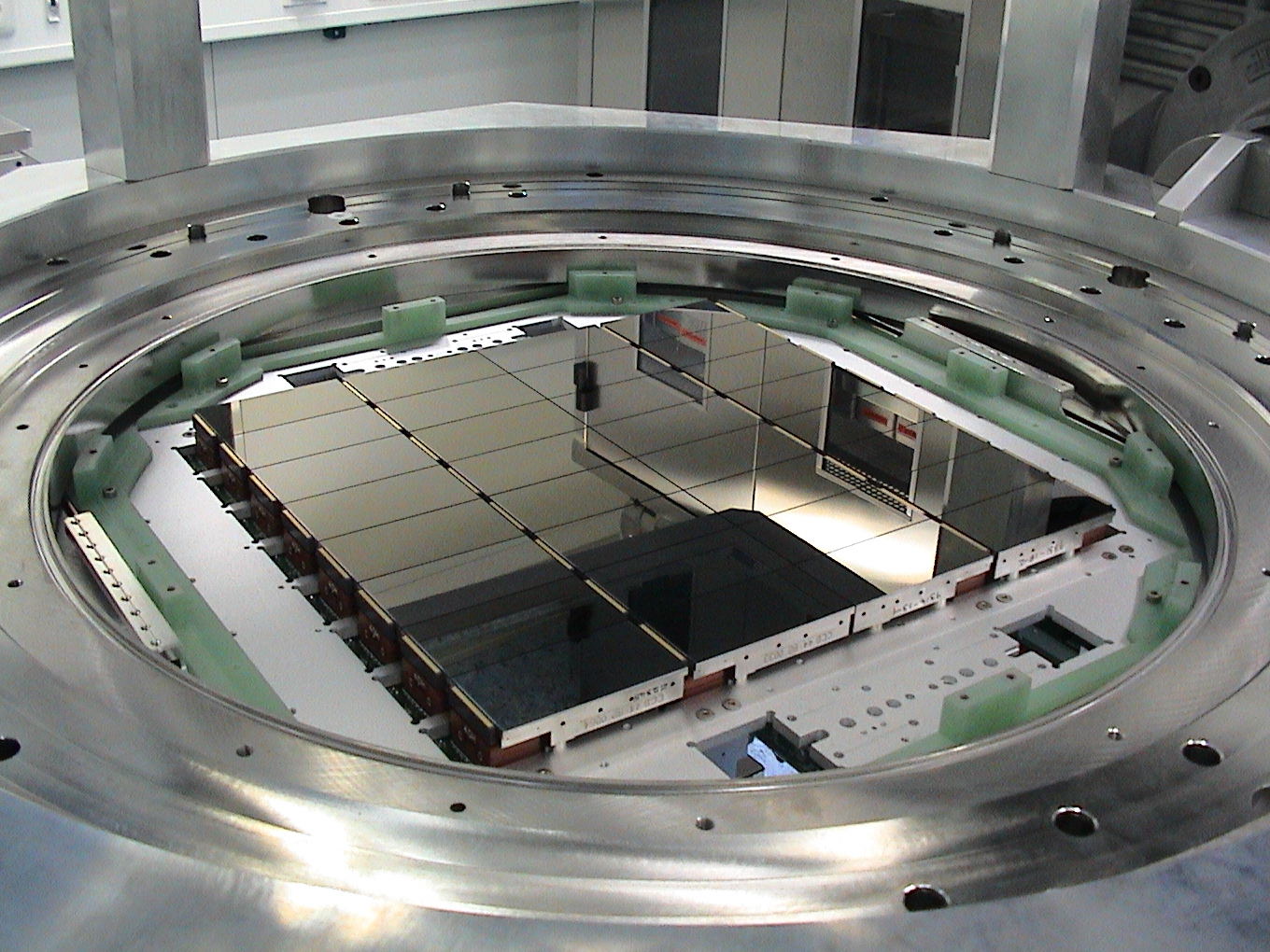

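Recently, the Vera C. Rubin Observatory, built to carry out the Legacy Survey of Space and Time (LSST), began its commissioning operations. Its mosaic camera contains 189 science CCDs, each 4K-by-4K, for a total of about 3.2 billion pixels; some are Teledyne e2V CCD250-82 sensors, and the rest were built by the Imaging Technology Laboratory (ITL) at the University of Arizona. The field of view is about 3.5 degrees in diameter, encompassing 9.6 square degrees of sky in each exposure.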

Image courtesy of Vera C. Rubin Observatory

| name | # of CCDs | # of pixels | area | first use |
| --- | --- | --- | --- | --- |
| SDSS | 30 | 126 million | 72,600 sq. mm | 1998 |
| MegaCAM | 36 | 340 million | 62,000 sq. mm | 2003 |
| OmegaCAM | 32 | 268 million | 60,000 sq. mm | 2011 |
| Hyper-SuprimeCam | 116 | 973 million | 210,000 sq. mm | 2013 (?) |
| LSST | 189 | 3.2 billion | 310,000 sq. mm | 2025-ish |

So, we have finally reached a point at which electronic detectors have not only matched, but passed photographic plates in their ability to record large volumes of information in a single exposure. Recall from our earlier calculations that the large photographic plates used in the 1950s for the Palomar Observatory Sky Survey had roughly 1.3 billion resolution elements. The LSST mosaic CCD camera has more than twice as many -- 3.2 billion -- and records much more of the light which strikes it.
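As a last bit of arithmetic, this sketch checks two pixel counts from their chip formats (assuming 32 science chips of 2K-by-4K for OmegaCAM, as in the final table, and 189 nominal 4K-by-4K sensors for LSST), then compares every camera in the table with the roughly 1.3 billion resolution elements estimated for a Palomar plate. The dictionary simply copies the table; nothing here is new data.

```python
# Pixel counts copied from the mosaic-camera table above.
cameras = {
    "SDSS": 126e6,
    "MegaCAM": 340e6,
    "OmegaCAM": 268e6,
    "Hyper-SuprimeCam": 973e6,
    "LSST": 3.2e9,
}

# Rounded estimate for a 14-inch Palomar plate with 10-micron resolution elements.
PLATE_ELEMENTS = 1.3e9

def mosaic_pixels(n_chips, width, height):
    """Total pixels in a mosaic built from identical chips."""
    return n_chips * width * height

# Cross-check two table entries from their chip formats.
omegacam = mosaic_pixels(32, 2048, 4096)   # 2K-by-4K science chips
lsst = mosaic_pixels(189, 4096, 4096)      # nominal 4K-by-4K sensors
print(f"OmegaCAM {omegacam:,}  LSST {lsst:,}")

for name, pixels in cameras.items():
    ratio = pixels / PLATE_ELEMENTS
    verdict = "exceeds" if ratio > 1 else "falls short of"
    print(f"{name:<17} {ratio:4.2f} x plate: {verdict} the plate")
```

Only the last entry in the table beats the plate, and by a factor of roughly 2.5.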
