The question above came from a woman who was dissatisfied with the pictures from her daughter's wedding. Why, she wondered, did the pictures seem to alter her appearance?

There might be several reasons, in general, for a person to appear "fatter" in photographs than to the eye: the type of lens used to take the picture, details in the background, apparel, stance and pose, and so on. But I can think of one reason that must contribute to the effect: a camera has only a single point of view, while a human sees things with two eyes. Let me try to explain.

Suppose we set an object in front of a distant background. If we take a picture of the object with a camera, the object will appear to cover a certain range of the background, bounded by its left and right edges.

On the other hand, if we replace the camera with a person, the view changes. Humans have two eyes, separated by about 7 or 8 centimeters. Our brains take the image from each eye as input and fuse the two into a single picture. One result of this binocular input is a sense of depth: we can estimate how far away an object is. Another, less familiar, result is a change in the apparent width of the object, because our brain combines the left eye's view of the background behind the object's left edge with the right eye's view of the background behind its right edge.

In real life, when we focus on a nearby object, we usually don't pay attention to details in the background. When we examine a photograph, however, we can more easily compare objects in the foreground with those in the background (unless the photographer has taken care to throw the background far out of focus).

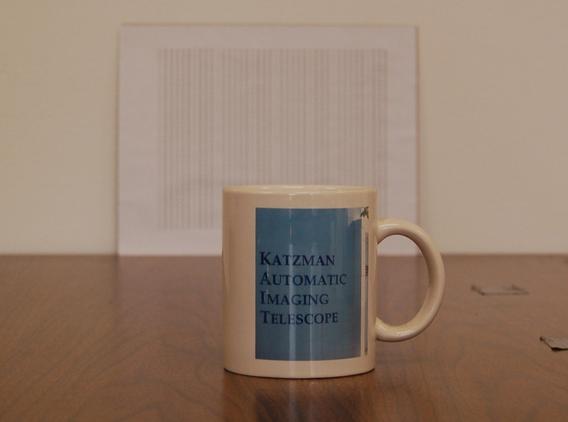
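To put rough numbers on this effect, here is a small Python sketch of the simplified geometry. It assumes a flat background parallel to the picture, an object centred straight ahead, and the fusion rule described above (the left eye supplies the left edge, the right eye the right edge). The function name `hidden_background_width` and all the distances are hypothetical, chosen only for illustration.

```python
def hidden_background_width(obj_width, obj_dist, bg_dist, eye_sep=0.0):
    """Width of the background strip hidden behind an object (same length units).

    Simplified model (an assumption, not a full model of human vision):
    - the object is centred straight ahead at distance obj_dist,
    - the background is a flat plane at distance bg_dist > obj_dist,
    - eye_sep = 0 models a single camera; eye_sep > 0 models two eyes,
      with the left edge seen by the left eye and the right edge by the right eye.
    For objects narrower than eye_sep the result can go negative, and this
    simple model no longer applies.
    """
    scale = bg_dist / obj_dist
    # Each edge's sight line runs from its eye (at -eye_sep/2 or +eye_sep/2)
    # past the object's edge to the background; the hidden strip lies between.
    return eye_sep + (obj_width - eye_sep) * scale


# Hypothetical numbers: a 9 cm mug 40 cm away, a wall 130 cm away.
camera = hidden_background_width(9, 40, 130)               # single viewpoint
two_eyes = hidden_background_width(9, 40, 130, eye_sep=8)  # eyes 8 cm apart
print(f"camera:   mug hides {camera:.2f} cm of wall")    # camera:   mug hides 29.25 cm of wall
print(f"two eyes: mug hides {two_eyes:.2f} cm of wall")  # two eyes: mug hides 11.25 cm of wall
```

With these made-up distances the mug blocks far more of the wall from the camera's single viewpoint than in the fused two-eye view, so against the background it looks wider to the camera. The exact figures depend entirely on the assumed distances.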

Let me illustrate this theory with some actual pictures. I placed a coffee mug onto a table, and taped a piece of paper with some regular columns of numbers to the wall a few feet behind it. Here's a picture, taken from directly in front of the mug. Not very interesting, I'm afraid.

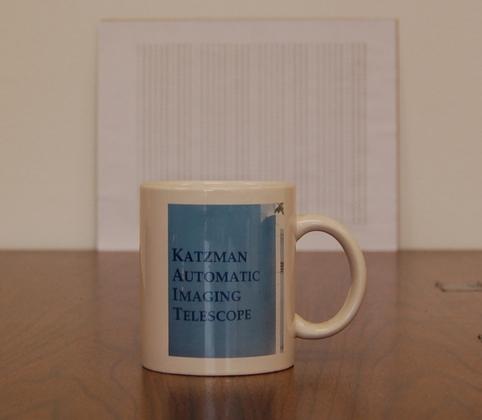

I then moved the camera 4 cm to the left, to the position a person's left eye would occupy if his nose were directly in line with the center. As you can see, a picture taken from this vantage point shifts the mug relative to the background.

And here's a picture taken from the vantage of the right eye, 4 cm to the right of the center.
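The sideways shift between these views is ordinary parallax. As a rough sketch under the same flat-background assumption (the function name and distances are again hypothetical), we can project the mug's centre onto the wall from each camera position, with x measured in centimetres and increasing to the right:

```python
def point_on_background(viewer_x, point_x, point_dist, bg_dist):
    """Where the sight line from a viewer at (viewer_x, 0) through a point at
    (point_x, point_dist) meets a flat background at depth bg_dist.
    x increases to the right as seen from the camera (an assumed convention)."""
    return viewer_x + (point_x - viewer_x) * bg_dist / point_dist


# Hypothetical set-up: mug centre 40 cm away, wall 130 cm away,
# camera moved 4 cm to either side of centre.
for label, cam_x in [("left eye", -4), ("centre", 0), ("right eye", 4)]:
    x = point_on_background(cam_x, 0, 40, 130)
    print(f"{label}: mug centre lines up with the wall at x = {x:+.1f} cm")
```

Under these assumptions, moving the camera 4 cm to the left makes the mug line up with a spot about 9 cm to the right on the wall, and vice versa: the near object slides against the background opposite to the camera's motion.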

How can we compare the camera's one-eyed perspective with a live human's two-eyed view? My solution was to merge the left-eye and right-eye pictures together as follows: I sliced each picture vertically through the exact center of the mug in that picture, then spliced the left-hand half of the left eye's picture to the right-hand half of the right eye's picture.
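The splicing step amounts to simple slicing. Below is a minimal sketch, assuming each picture is stored as a list of pixel rows of equal height and that the column at the mug's centre has already been located by hand in each picture; the function name `splice_two_eye_view` is hypothetical.

```python
def splice_two_eye_view(left_img, right_img, left_center, right_center):
    """Join the left half of the left-eye picture to the right half of the
    right-eye picture.

    left_img, right_img: lists of rows (each row a list of pixels), same height.
    left_center, right_center: column index of the mug's centre in each picture.
    """
    if len(left_img) != len(right_img):
        raise ValueError("pictures must have the same height")
    # Columns [0, left_center) come from the left eye,
    # columns [right_center, end) from the right eye.
    return [l_row[:left_center] + r_row[right_center:]
            for l_row, r_row in zip(left_img, right_img)]


# Tiny example: 2x6 "pictures" whose mug centres sit at different columns.
left = [["L"] * 6 for _ in range(2)]
right = [["R"] * 6 for _ in range(2)]
fused = splice_two_eye_view(left, right, left_center=3, right_center=2)
print(["".join(row) for row in fused])  # ['LLLRRRR', 'LLLRRRR']
```

With NumPy image arrays the equivalent would be `np.hstack([left[:, :left_center], right[:, right_center:]])`.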

Once again, here's the central view of the mug ...

... and here's the fused image from the left- and right-eye perspectives. You can see that the two halves did not quite match up properly.

One way to compare the two views is to place a strip from each picture directly above the other, like this (camera view at top, two-eye view below):

The mug in each picture stretches across exactly the same number of pixels ... but the BACKGROUND is very different. In the combined two-eye view, the background appears much wider than in the camera view. So, even though the mug is really the same width in each, it will look "fatter" relative to the background in the camera view.

Perhaps it's easier to appreciate this difference if one blinks between the two pictures, alternating them rapidly. Does the mug appear to change its width when you look at the picture below?